Satellite Signal Latency

Iridium - Motorola satellite mobile phone is the original Iridium phone and the first wireless phone in history to provide total global coverage.

It is often thought that light travels so quickly that the time taken for it to get from its source to its target would be irrelevant. One would thus expect communication latency to be negligible. Unfortunately, that is not necessarily the case as the following examples illustrate.

Latency in a packet-switched network is measured either one-way (the time from the source sending a packet to the destination receiving it), or round-trip (the one-way latency from source to destination plus the one-way latency from the destination back to the source). Round-trip latency is more often quoted, because it can be measured from a single point. Note that round trip latency excludes the amount of time that a destination system spends processing the packet. Many software platforms provide a service called ping that can be used to measure round-trip latency. Ping performs no packet processing; it merely sends a response back when it receives a packet (i.e. performs a no-op), thus it is a relatively accurate way of measuring latency.

Where precision is important, one-way latency for a link can be more strictly defined as the time from the start of packet transmission to the start of packet reception. The time from the start of packet reception to the end of packet reception is measured separately and called "transmission delay". This definition of latency is independent of the link's throughput and the size of the packet, and is the absolute minimum delay possible with that link.

However, in a non-trivial network, a typical packet will be forwarded over many links via many gateways, each of which will not begin to forward the packet until it has been completely received. In such a network, the minimal latency is the sum of the minimum latency of each link, plus the transmission delay of each link except the final one, plus the forwarding latency of each gateway.
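The sum described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Link` class, the function names and the example figures are hypothetical, and every gateway is assumed to be a pure store-and-forward device.

```python
from dataclasses import dataclass


@dataclass
class Link:
    latency_s: float  # start of transmission to start of reception
    rate_bps: float   # link throughput in bits per second


def transmission_delay(packet_bits, link):
    """Time from start of reception to end of reception on one link."""
    return packet_bits / link.rate_bps


def min_path_latency(links, packet_bits, gateway_forwarding_s):
    """Minimal one-way latency over a chain of store-and-forward links."""
    # Latency of every link on the path.
    total = sum(link.latency_s for link in links)
    # Transmission delay of every link except the final one.
    total += sum(transmission_delay(packet_bits, link) for link in links[:-1])
    # Forwarding latency of each gateway between the links.
    total += sum(gateway_forwarding_s)
    return total


# Hypothetical path: LAN, satellite hop, LAN, all at 1 Mbit/s, 1500-byte packet.
example_links = [Link(0.001, 1e6), Link(0.120, 1e6), Link(0.002, 1e6)]
example_total = min_path_latency(example_links, 12_000, [0.0005, 0.0005])
```

With these made-up numbers the satellite hop dominates, but the two intermediate transmission delays still add 24 ms on top of the pure propagation time.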

Satellite transmission

Although intercontinental television signals travel at the speed of light, they nevertheless develop a noticeable latency over long distances. This is best illustrated when a newsreader in a studio talks to a reporter halfway around the world. The signal travels via a communication satellite situated in geosynchronous orbit to the reporter and then goes all the way back to the studio, resulting in a journey of almost one hundred thousand kilometers. Most, but not all, of this latency is distance-induced, since there are also latencies built into the equipment at each end and in the satellite itself. The time difference may not be much more than a second, but it is noticeable by humans. This time lag is due to the massive distances between Earth and the satellite: even at the speed of light it takes a sizeable fraction of a second to travel that distance. Low-Earth orbit is sometimes used to reduce this delay, but the expense of the constellation increases because more satellites are needed to ensure continuous coverage.

Satellite networks promise a new era of global connectivity, but also present new challenges to common Internet applications. In this work, we have shown that many popular Internet applications perform to user expectation over satellite networks, such as videoteleconferencing, MBone multicast, bulk data transfer, background electronic mail, and non-real-time information dissemination. Some other applications, especially highly interactive applications such as Web browsing, do suffer from the inefficiencies of the current TCP standard over high-bandwidth long-latency links. However, performance of these applications can be improved by utilizing many of the techniques discussed in this paper. In the long term, further improvements can be made at the protocol level by extending the current TCP standard, although much work needs to be done on possible extensions to ensure that they do not negatively affect the Internet as a whole.

Impact on Satellite Internet Systems

Most Internet users have heard about latency (almost always in the context of gaming) but don't really understand much about it. Latency is the delay in a single bit (or packet - on fast circuits there's not much queuing delay so there's not much difference) getting from one place to another and back. Latency is almost always the result of path selection and limitations of the speed of light. For example, if my best path to your server goes from NYC to London and back, then I will have a *minimum* latency of around 60ms and likely more like 80. Not terrible but not great.

Large latencies impact all kinds of uses of the Net, including connection set-up, interactive typing or screen-refresh and throughput. The main problem that the Boeing engineers face is that geostationary satellites (which maintain their position above a particular spot on the earth; almost all communications satellites fit this description) are really high up. In fact, they add at least 300 ms of unidirectional latency all by themselves (that's aircraft->satellite->Europe).

Most communications satellites are located in the Geostationary Orbit (GSO) at an altitude of approximately 35,786 km above the equator. At this height the satellites go around the earth in a west to east direction at the same angular speed as the earth's rotation, so they appear to be almost fixed in the sky to an observer on the ground. The time for one satellite orbit and the time for the earth to rotate is 1 sidereal day, or 23 h 56 m 4 s.

Radio waves travel at the speed of light, which is about 300,000 km per second. If you are located on the equator and are communicating with a satellite directly overhead, then the total distance (up and down again) is nearly 72,000 km, so the time delay is about 240 ms. (ms means millisecond, or one thousandth of a second, so 240 ms is just under a quarter of a second.)

A satellite is visible from a little less than one third of the earth's surface, and if you are located at the edge of this area the satellite appears to be just above the horizon (see my satellite dish pointing calculator for slant range, azimuth and elevation angle calculations). The distance to the satellite is then greater: for earth stations at the extreme edge of the coverage area it is approximately 41,756 km. If you were to communicate with another similarly located site, the total distance is nearly 84,000 km, so the end-to-end delay is almost 280 ms, which is a little over a quarter of a second.

Extra delays occur due to the length of cable extensions at either end, and very much so if a signal is routed by more than one satellite hop. Significant delay can also be added in routers, switches and signal processing points along the route.

TCP/IP Acceleration: The TCP/IP protocol does not perform well over satellite, and a number of companies have developed ways of temporarily changing the protocol to XTP over the satellite link to achieve IP acceleration. See http://www.packeteer.com/resources/?attrvalue=75 for an example.
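The distance and delay arithmetic for a geostationary satellite can be reproduced with a short Python sketch. The slant-range formula is standard spherical-earth geometry, not the dish pointing calculator mentioned above. The Earth radius value and the function names are assumptions made for illustration, so the edge-of-coverage distance comes out close to, but not exactly at, the figure quoted in the text.

```python
import math

SPEED_OF_LIGHT_KM_S = 299_792.458
EARTH_RADIUS_KM = 6378.137    # assumption: equatorial radius, spherical earth
GEO_ALTITUDE_KM = 35_786.0    # altitude quoted in the text


def slant_range_km(elevation_deg,
                   altitude_km=GEO_ALTITUDE_KM,
                   earth_radius_km=EARTH_RADIUS_KM):
    """Distance from a ground station to the satellite at a given elevation angle."""
    el = math.radians(elevation_deg)
    orbit_radius = earth_radius_km + altitude_km
    return (math.sqrt(orbit_radius ** 2 - (earth_radius_km * math.cos(el)) ** 2)
            - earth_radius_km * math.sin(el))


def propagation_delay_ms(distance_km):
    """Free-space propagation time for a given distance, in milliseconds."""
    return distance_km / SPEED_OF_LIGHT_KM_S * 1000.0


# Up and down again with the satellite directly overhead (elevation 90 degrees).
overhead_ms = propagation_delay_ms(2 * slant_range_km(90.0))
# Up and down again between two stations that both see the satellite on the horizon.
edge_ms = propagation_delay_ms(2 * slant_range_km(0.0))
```

Under these assumptions `overhead_ms` lands a little under 240 ms and `edge_ms` a little under 280 ms, in line with the figures above.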

Satellite Internet Explained In Plain English: Latency

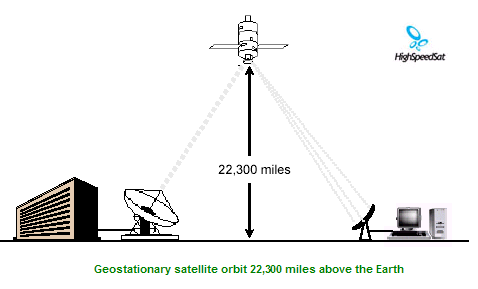

Latency is the amount of delay, measured in milliseconds, found in a round-trip data transmission. Not directly related to speed, latency can be an issue with all networks including satellites. Latency is caused by several factors, including the number of times the data is handled along the transmission path, for instance, by a router or server. Each time a data packet is handled by a device along the path (called a “hop”) several milliseconds of latency are induced. More importantly in the satellite world, latency is caused by the distance that the signal must travel. The satellites used for two-way Internet service are located approximately 23,000 miles above the equator. This means a round-trip transmission travels 23,000 miles to the satellite, 23,000 miles from the satellite to the remote site, and then, as the TCP/IP acknowledgment is returned, another 46,000 miles on the return trip. That's a total round trip of about 92,000 miles. Even at the speed of light, this accounts for more delay (in milliseconds) than is found in a terrestrial network. Most Internet applications, including web browsing, email and FTP, work in their normal manner even when traversing this long distance, and user experiences are very positive. Certain applications, such as Voice-Over-IP, are affected but also work. Some applications, such as online gaming, are not recommended. Citrix, and terminal emulators without local-echo, can also be affected by latency depending on the underlying application and configuration. If you have an application that is particularly sensitive to latency, it is highly recommended that you check with your software vendor to confirm how that application is affected.

VSAT Systems connects the secure traffic between your private satellite network and your company's headquarters network across a variety of customer-selected options including point-to-point T-1, Frame Relay PVC or VPN across the Internet. Since the Internet method is by far the least costly, it is the most popular.

This technology approach completely avoids the performance problems of VPN-over-satellite because there is no traditional VPN being used across the satellite portion of the connection.

TCP/IP over Satellite

It takes time for a satellite signal to be sent from your dish to the orbiting satellite back down to Earth… The speed of light is 186,000 miles per second and the orbiting satellite is 22,300 miles above earth. Calculated out, satellite latency is roughly ½ of a second, which is not a lot of time, but a few applications such as VPN (Virtual Private Network) don’t like this time delay. It is important to know if satellite latency will affect the way you will use the Internet.

A MISCONCEPTION ABOUT SATELLITE LATENCY

A common misconception is that latency has an effect on transfer rate. This is not true. A one Megabyte file will transfer just as quickly over a 1000Kbps satellite connection as it does over a 1000Kbps terrestrial connection. The only difference is that, over the satellite connection, it takes a little longer (still less than a second) for the file to begin transferring.
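The claim above can be put into numbers with a simple model: total time is the start-up delay plus file size divided by link rate. The sketch below makes several assumptions: a megabyte is taken as 1,000,000 bytes, 1000Kbps as 1,000,000 bits per second, the start-up delays are round illustrative figures, and protocol overhead and TCP window effects are ignored.

```python
def transfer_time_s(size_bytes, rate_bps, startup_latency_s):
    """Start-up delay plus the time to push every bit through the link."""
    return startup_latency_s + size_bytes * 8 / rate_bps


FILE_BYTES = 1_000_000   # assumption: 1 Megabyte = 10**6 bytes
RATE_BPS = 1_000_000     # assumption: 1000Kbps = 10**6 bits per second

satellite_s = transfer_time_s(FILE_BYTES, RATE_BPS, startup_latency_s=0.5)
terrestrial_s = transfer_time_s(FILE_BYTES, RATE_BPS, startup_latency_s=0.05)
```

In this model both transfers spend the same 8 seconds moving data, and the satellite link finishes less than half a second later.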

Speed of electro-magnetic waves

The speed of light also has some profound implications for networking technology. Light, or electromagnetic radiation, travels at 299,792,458 meters per second in a vacuum. Within a copper conductor the propagation speed is some three quarters of this speed, and in a fibre optic cable propagation is slightly slower still, at about two thirds of this speed.

Why is this relevant to network performance?

While it may take only about a microsecond to pass a packet across a couple of hundred meters within a local area network, it takes considerably longer to pass the same packet across a continent, or across the world. In many parts of the world Internet services are based on satellite access. Often these satellite-based services compete in the market with services based on terrestrial or undersea cable. And even with undersea cables, the precise path length used can vary considerably.
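To get a feel for the scale involved, the propagation speeds quoted earlier can be turned into delays for a few path lengths. This is a rough sketch: the velocity factors are the approximate fractions given in the text, and the 6,000 km cable length is a hypothetical example rather than a measured route.

```python
SPEED_OF_LIGHT_M_S = 299_792_458

# Approximate fraction of the vacuum speed of light in each medium.
VELOCITY_FACTOR = {
    "vacuum": 1.0,
    "copper": 0.75,
    "fibre": 2 / 3,
}


def propagation_delay_s(distance_m, medium):
    """One-way propagation time over a given distance in a given medium."""
    return distance_m / (SPEED_OF_LIGHT_M_S * VELOCITY_FACTOR[medium])


lan_delay_s = propagation_delay_s(200, "fibre")            # a couple of hundred meters
cable_delay_s = propagation_delay_s(6_000_000, "fibre")    # hypothetical 6,000 km cable
geo_hop_delay_s = propagation_delay_s(2 * 35_786_000, "vacuum")  # up to GEO and down
```

The local hop takes about a microsecond, the long cable about 30 ms, and the satellite hop close to a quarter of a second.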

What's the difference?

The essential difference is "latency", or the time taken for a packet to traverse the network path. Latency is a major factor in IP performance, and reducing or mitigating the effects of latency is one of the major aspects of network performance engineering. The time taken to get a packet through a network path is dependent on the length of the path and the speed of propagation of electromagnetic radiation through that medium, and this delay becomes a very important factor in network performance.

Why is Network Latency so Important for TCP/IP?

In order to understand why this is the case it is appropriate to start with the IP protocol itself. IP is a 'datagram' protocol. For data to be transferred across an IP network the data is first segmented into packets. Prepended to each packet is an IP protocol header. IP itself makes remarkably few assumptions about the characteristics of the underlying transmission system. IP packets can be discarded, reordered or fragmented into a number of smaller IP packets and remain within the scope of the protocol. The IP protocol does not assume any particular network service quality, bandwidth, reliability, delay, variation in delay or even reordering of packets as they pass through the network.

In itself, this unreliable service sounds like it's not overly useful. If sending a packet through an IP network is an exercise in probability theory rather than a reliable transaction, then how does the Internet work at all? If we want reliable service then we need to look at a protocol that sits directly on top of IP in a stack-based model of the network's architecture. The real work in turning IP into a reliable networking platform is undertaken through the Transmission Control Protocol, or TCP. TCP works by adding an additional header to the IP packet, wedged between the outer IP packet header and the inner data payload. This header includes sequence and acknowledgement numbers, a window size field and a number of control flags.

TCP is a reliable data transfer protocol. The requirements for reliability in the data transfer implies that TCP will detect any form of data corruption, loss, or reordering on the part of the network and will ensure that the sender retransmits as many times as necessary until the data is transferred successfully. The essential characteristic of TCP is that the sender keeps a copy of every sent packet, and will not discard it until it receives an acknowledgement (ACK) from the other end of the connection that the packet has been received successfully.

Of course, sending data packet by packet and waiting for an acknowledgement each time would be a very cumbersome affair. Instead, TCP uses 'sliding window control' to send data. This means that the sender sends a sequence of packets (a 'window'), and then holds a copy of these packets while awaiting ACKs from the receiver to signal back that the packets have arrived successfully. Each time an ACK for new data is received, the window is advanced by one packet, allowing the sender to send the next packet into the network. The packets are numbered in sequence so that the receiver can re-sort the data into the correct order as required.
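The sliding window idea can be illustrated with a toy sender. This is a deliberately simplified sketch, not how a real TCP stack is written: it counts whole packets rather than bytes, uses a fixed window, has no timers or retransmission, and the class and method names are invented for the example.

```python
class SlidingWindowSender:
    """Toy sender that keeps copies of packets until they are acknowledged."""

    def __init__(self, packets, window_size):
        self.packets = list(packets)
        self.window_size = window_size
        self.base = 0       # oldest packet not yet acknowledged
        self.next_seq = 0   # next packet that has never been sent

    def send_new(self):
        """Send as many new packets as the window allows; return their numbers."""
        sent = []
        while (self.next_seq < len(self.packets)
               and self.next_seq < self.base + self.window_size):
            sent.append(self.next_seq)
            self.next_seq += 1
        return sent

    def on_ack(self, ack_no):
        """Cumulative ACK: every packet numbered below ack_no has arrived."""
        if ack_no > self.base:
            self.base = min(ack_no, self.next_seq)

    def held_copies(self):
        """Packets sent but not yet acknowledged, kept in case of retransmission."""
        return self.packets[self.base:self.next_seq]


sender = SlidingWindowSender("abcde", window_size=3)
first_burst = sender.send_new()   # the window is filled immediately
sender.on_ack(1)                  # packet 0 acknowledged, window slides by one
second_burst = sender.send_new()  # exactly one new packet may now be sent
```

Each ACK for new data frees one slot, so after the initial burst the sender is paced by the arrival of acknowledgements.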

TCP also uses a form of adaptive rate control, where the protocol attempts to sense the upper limit of sustainable network traffic, and drive the session at a rate that is comparable to this maximal sustainable rate. It does this through dynamically changing the size of the sliding window, coupled with monitoring of packet loss. When TCP encounters packet loss, as signalled by the ACK return packets, it assumes that the loss is due to network congestion, and the protocol immediately reduces its data transfer rate and once more attempts to probe into higher transfer rates. The way in which TCP adjusts its rate is by increasing the window each time an ACK for new data is received, and reducing the window size when the sender believes that a packet has been discarded.

There are two modes of window control. When starting a TCP session a control method called "slow start" is used, where the window is increased by one packet each time an ACK is received. If you get an ACK for every sent packet, then the result is that the sender doubles its window size, and hence doubles its data transfer rate, for each time interval required to send a packet and receive the matching ACK. Networking is not a science of the infinite, and a sender cannot continuously increase its sending rate without limit. At some stage the receiver will signal that its receiving buffer is saturated, or the sender will exhaust its sending buffer, or a network queue resource will become saturated. In the last case this network queue saturation will result in packet loss.

When TCP experiences packet loss the TCP sender will immediately halve its sending rate and then enter "congestion avoidance" mode. In congestion avoidance mode the TCP sender will increase its sending rate by one packet every round trip time interval. Paradoxically, this rate increase is typically very much slower than the "slow start" rate.
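The two modes can be seen side by side in a small simulation of the window size, one step per round trip. This is a minimal sketch of the behaviour as described above, not a model of any particular TCP implementation: the function name and parameters are hypothetical, losses are injected at chosen rounds, and buffer limits are ignored.

```python
def simulate_window(rounds, loss_rounds=(), initial_window=1):
    """Return the window size (in packets) at the start of each round trip."""
    window = initial_window
    in_slow_start = True
    history = []
    for round_no in range(rounds):
        history.append(window)
        if round_no in loss_rounds:
            # Loss detected: halve the window and switch to congestion avoidance.
            window = max(1, window // 2)
            in_slow_start = False
        elif in_slow_start:
            # Slow start: one extra packet per ACK doubles the window each round trip.
            window *= 2
        else:
            # Congestion avoidance: one extra packet per round trip.
            window += 1
    return history


trace = simulate_window(rounds=8, loss_rounds={4})
```

For this input the trace is `[1, 2, 4, 8, 16, 8, 9, 10]`: exponential growth up to the loss in round 4, then a halving followed by growth of one packet per round trip.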

For TCP, the critical network characteristic is the latency. The longer the latency, the more insensitive TCP becomes in its efforts to adapt to the network state. As the latency increases, TCP's rate increase becomes slower, and the traffic pattern becomes more bursty in nature. These two factors combine to reduce the efficiency of the protocol and hence the efficiency of the network to carry data. This leads to the observation that, from a performance perspective and from a network efficiency perspective, it is always a desirable objective to reduce network latency.
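Because both growth modes advance once per round trip, the time TCP needs to reach a given window scales directly with latency. The following sketch compares a terrestrial and a satellite path under simplifying assumptions: no loss, window measured in packets, and round-trip times of 80 ms and 550 ms chosen purely for illustration.

```python
def slow_start_time_s(target_window, rtt_s, initial_window=1):
    """Time for slow start to reach target_window, doubling once per round trip."""
    window = initial_window
    round_trips = 0
    while window < target_window:
        window *= 2
        round_trips += 1
    return round_trips * rtt_s


def congestion_avoidance_time_s(start_window, target_window, rtt_s):
    """Time to grow from start_window to target_window at one packet per round trip."""
    return max(0, target_window - start_window) * rtt_s


TERRESTRIAL_RTT_S = 0.08   # assumption: illustrative terrestrial round trip
SATELLITE_RTT_S = 0.55     # assumption: illustrative geostationary round trip

ramp_terrestrial = slow_start_time_s(64, TERRESTRIAL_RTT_S)
ramp_satellite = slow_start_time_s(64, SATELLITE_RTT_S)
recover_terrestrial = congestion_avoidance_time_s(32, 64, TERRESTRIAL_RTT_S)
recover_satellite = congestion_avoidance_time_s(32, 64, SATELLITE_RTT_S)
```

With these figures, recovering from a single halving of a 64-packet window takes about 2.6 seconds on the terrestrial path and about 17.6 seconds over the satellite.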

Of course there is always a gap between theory and practice. The practical consideration is that TCP will only run as fast as the minimum of the size of the sending and receiving buffers in the end systems allows. On longer delay paths in the Internet it's not the available bandwidth in the network that's the bottleneck; it's the performance of the end system coupled with the network latency that limits performance.
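The buffer limit can be expressed as a simple upper bound: a sender can have at most one window of data in flight per round trip, so throughput cannot exceed the window size divided by the round-trip time. The sketch below uses illustrative numbers (a 65,535-byte buffer at each end and a 0.5 second round trip); the function names are hypothetical.

```python
def max_tcp_throughput_bps(send_buffer_bytes, recv_buffer_bytes, rtt_s):
    """Upper bound on throughput when one window is sent per round trip."""
    window_bytes = min(send_buffer_bytes, recv_buffer_bytes)
    return window_bytes * 8 / rtt_s


def bandwidth_delay_product_bytes(link_bps, rtt_s):
    """Buffer size needed at both ends to keep a link of this rate full."""
    return link_bps * rtt_s / 8


ceiling_bps = max_tcp_throughput_bps(65_535, 65_535, rtt_s=0.5)
needed_bytes = bandwidth_delay_product_bytes(10_000_000, rtt_s=0.5)
```

Here a single connection tops out at roughly 1 Mbit/s no matter how fast the link is, and filling a 10 Mbit/s link at that latency would need buffers of about 625,000 bytes.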